After an exciting Day 1 and Day 2 at INTEGRATE 2021, the stage was perfectly set for the last day of the event.

Before you proceed further, we recommend reading our recaps of Day 1 and Day 2.

On the third day of INTEGRATE 2021, we kick-started the sessions with Dan Toomey, Senior Integration Specialist at Deloitte. He is also an Azure MVP, a Microsoft Certified Trainer (MCT), and a published Pluralsight author. He extended his thanks to the following speakers:

He gave an interesting session on how users can simplify the process of moving from BizTalk solutions to Azure Integration Services.

He listed the reasons why users migrate from BizTalk to Azure Integration Services:

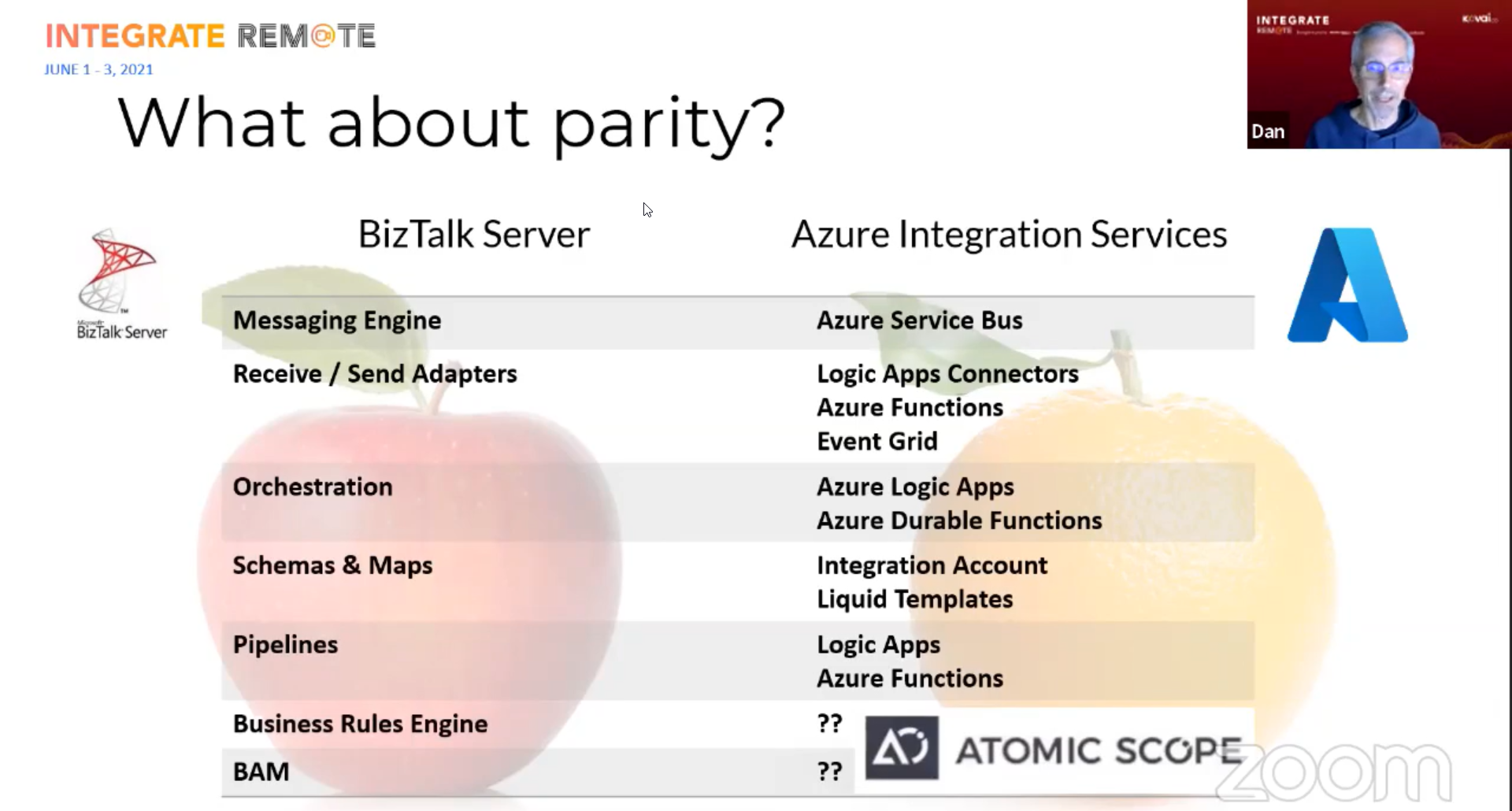

He then highlighted the key differences between BizTalk Server and Azure Integration Services. He also pointed attendees to Atomic Scope, one of Kovai.co’s products, which can help users who want to monitor and administer the Business Rules Engine and Business Activity Monitoring.

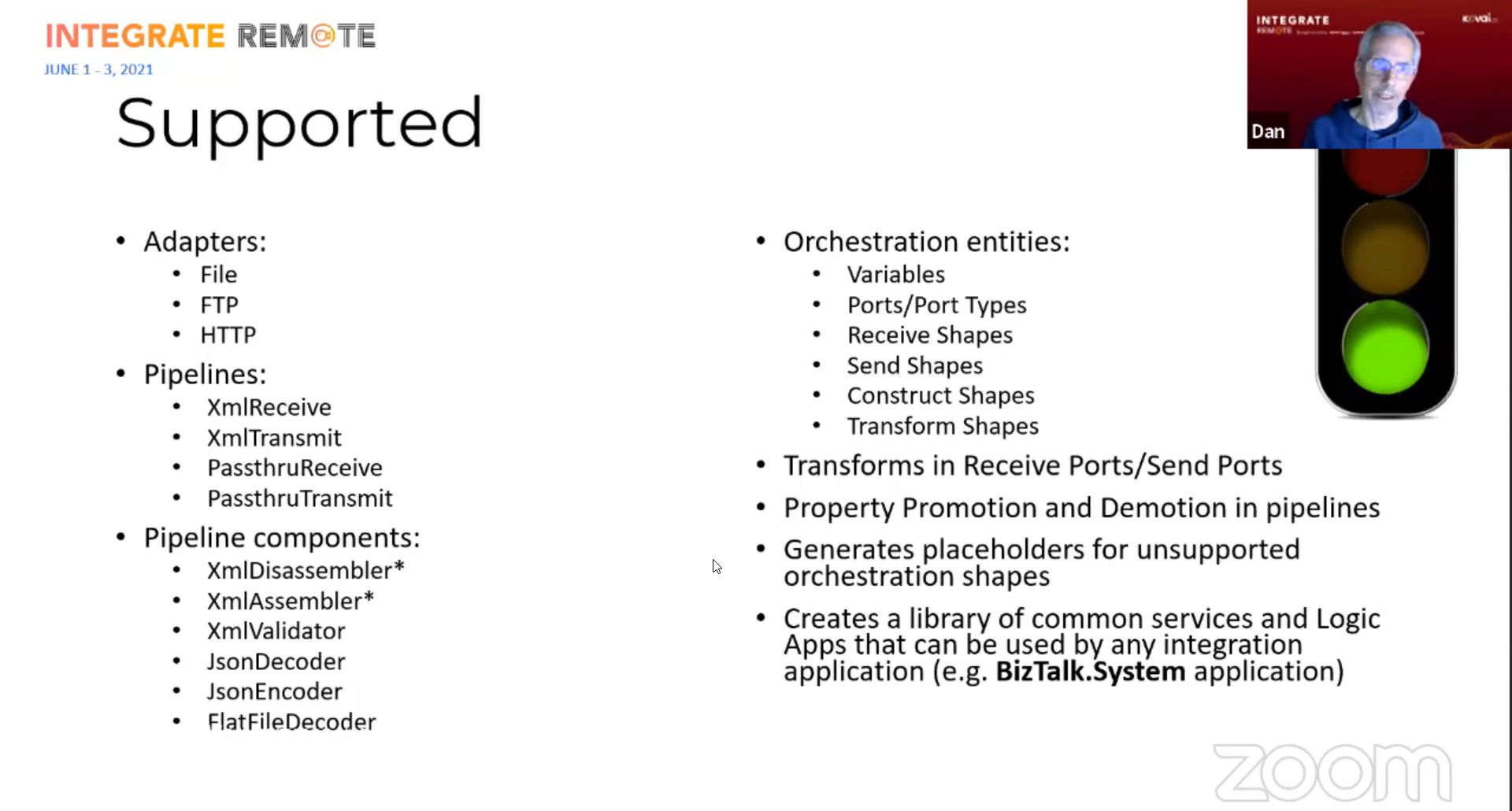

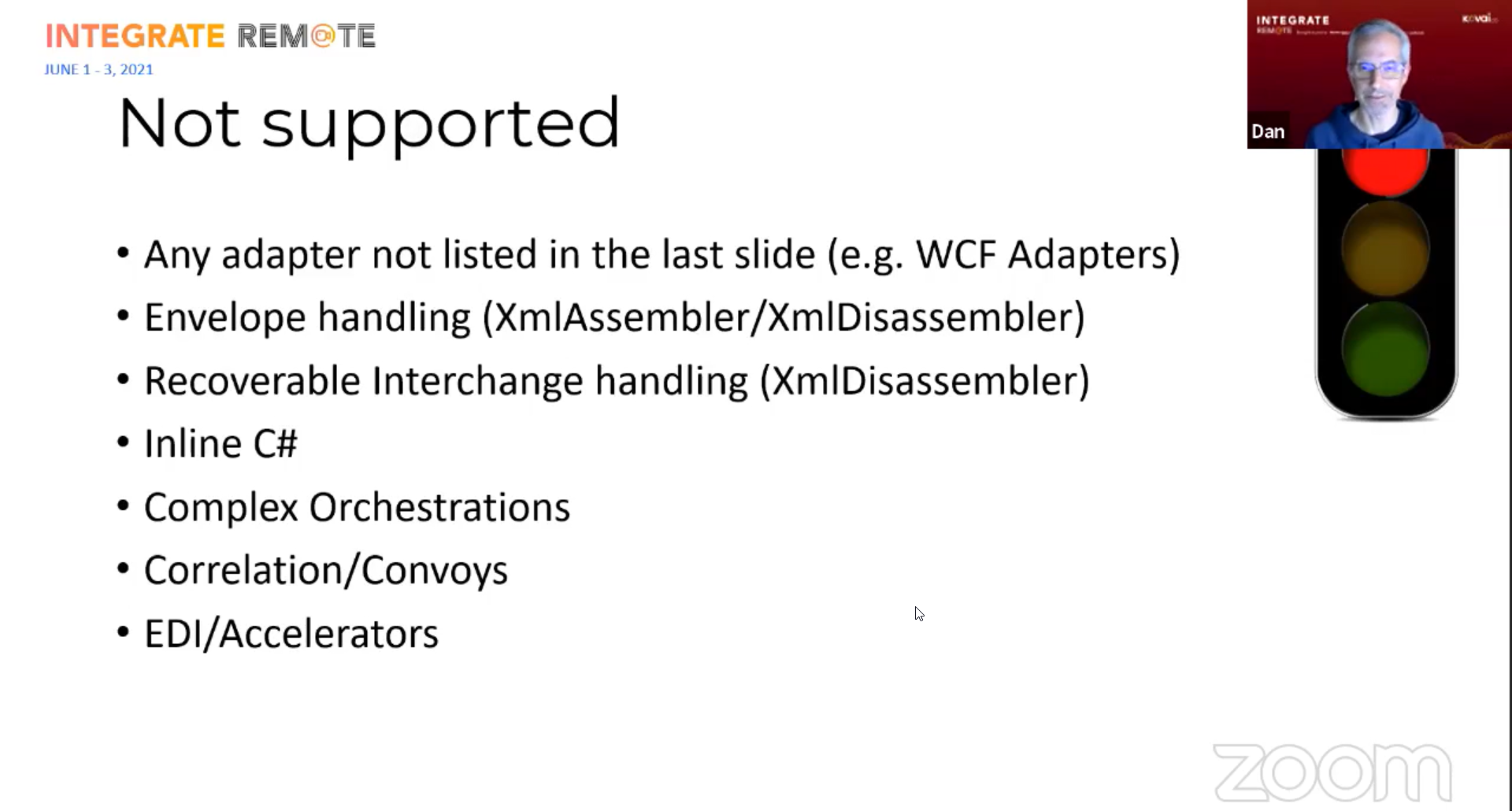

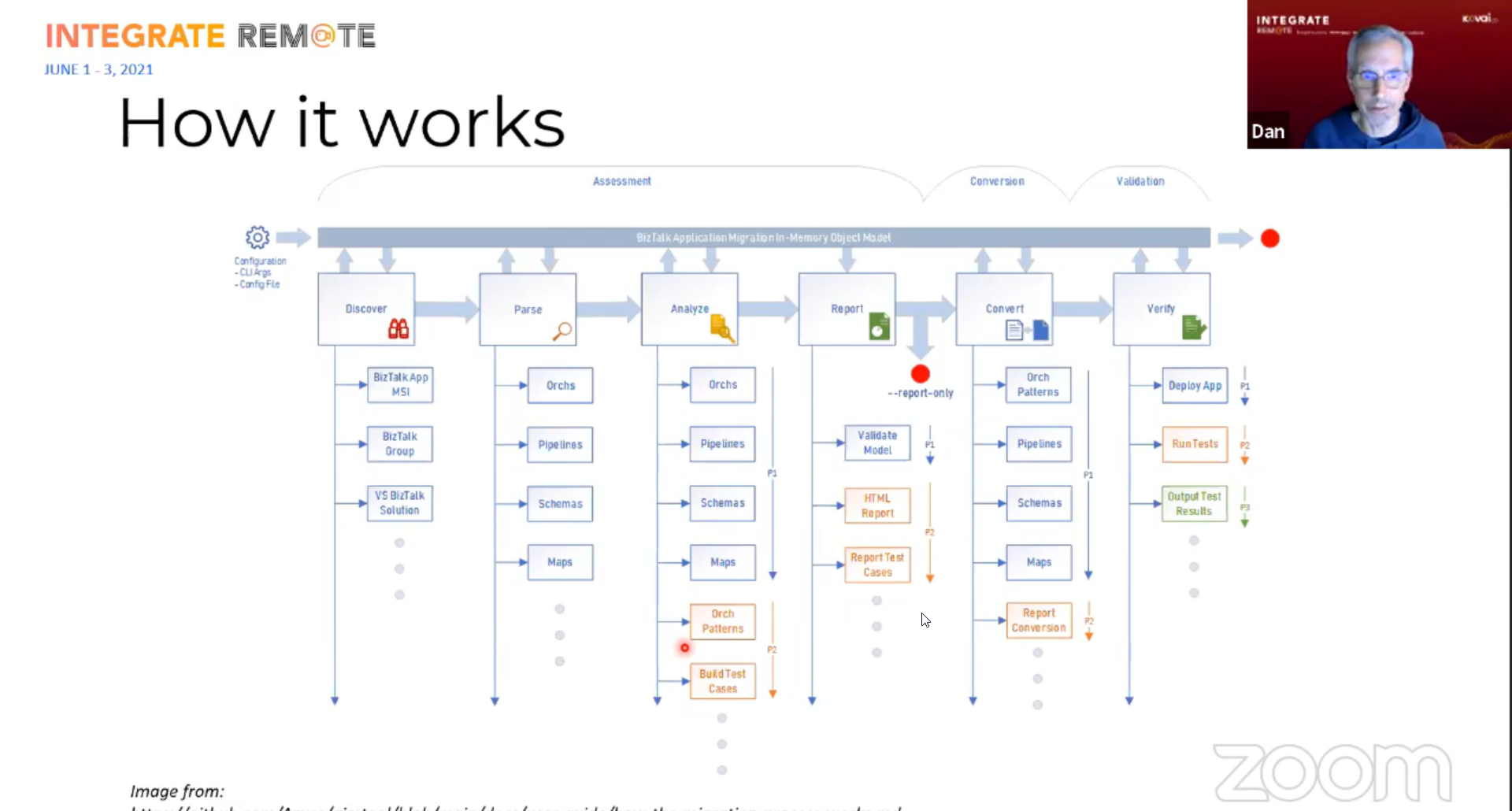

Dan explained the command-line utility for migrating BizTalk applications to Azure Integration Services and the capabilities it offers.

Dan then listed the features that are and are not supported for a quick and easy migration.

He recommended the following prerequisites for an easier migration:

He then installed the tool with Chocolatey from PowerShell and used it to convert a BizTalk application. In a demo, he showed exactly how the conversion works.

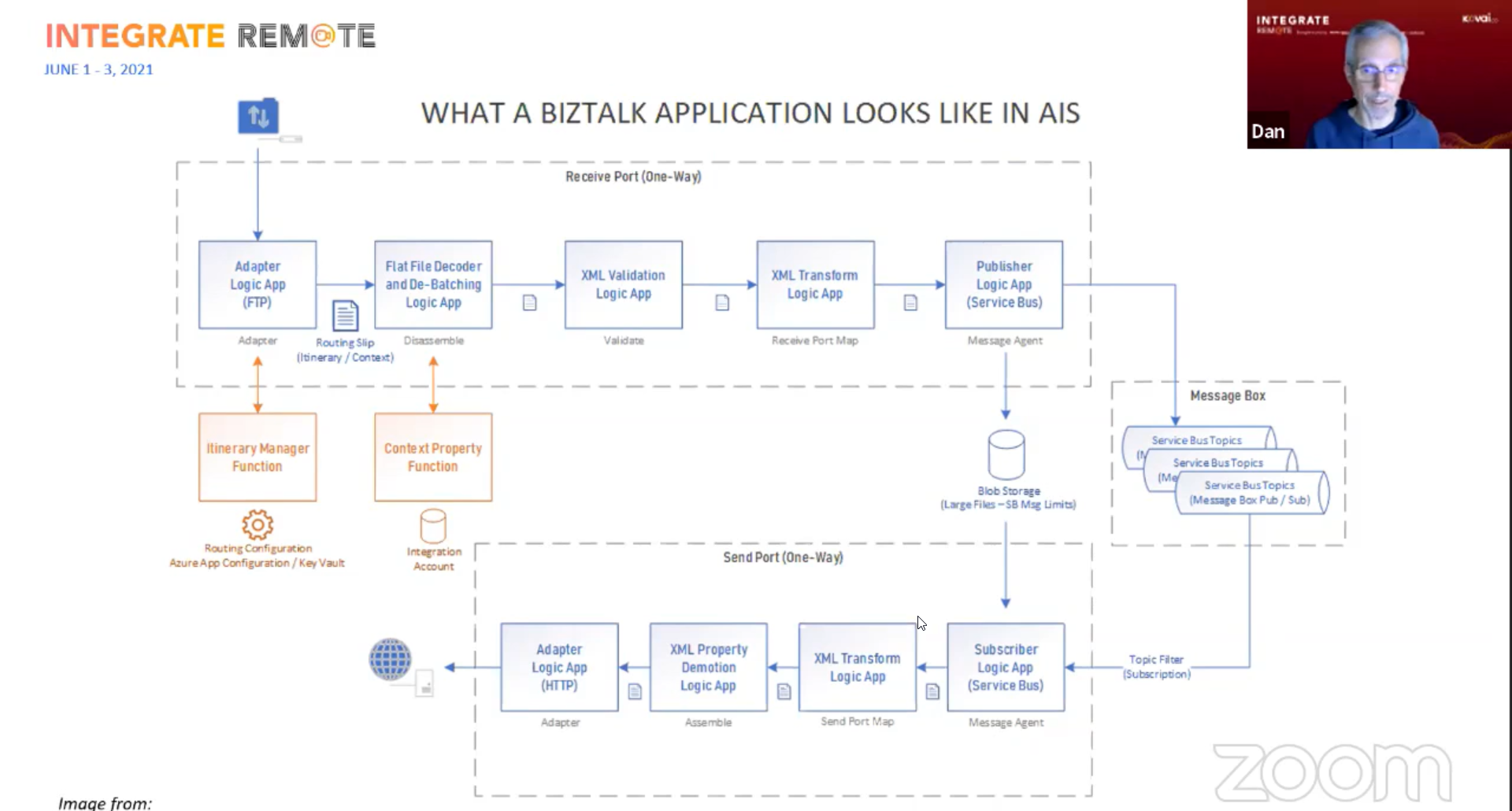

He briefly explained what a BizTalk application looks like in AIS.

Dan explained key points such as what the resulting Logic App structure looks like.

He provided four sample scenarios that should help users:

Dan closed the presentation with a demo of these scenarios. The session ended with a Q&A that produced a lot of insightful answers.

Derek Li, Program Manager at Microsoft, handled the session on “Advanced Integration with Logic Apps” at INTEGRATE 2021.

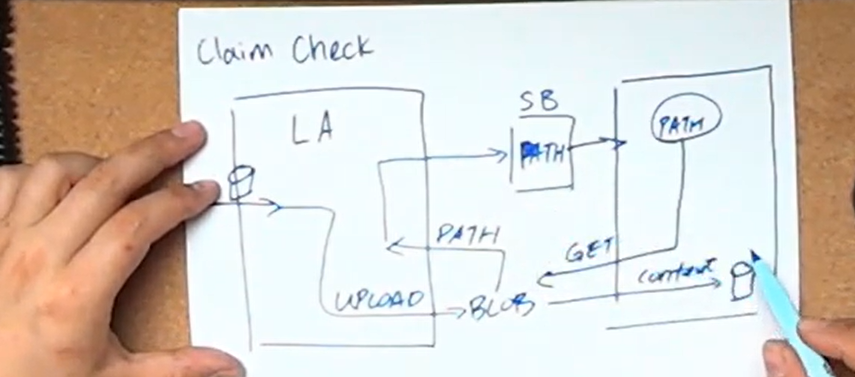

Derek started with messaging patterns, beginning with a scenario involving the Service Bus connectors. The problem he addressed was the message size limit. It is capped at a certain number of kilobytes, which becomes a hurdle for users who need to handle larger files. He explained how a messaging pattern called Claim Check addresses this. He implemented it with two Logic Apps, a sender and a receiver, using Blob Storage as the intermediate store.
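To make the Claim Check idea concrete, here is a minimal in-memory Python sketch. It is an illustration only and not Derek’s Logic Apps implementation. A plain dict stands in for Blob Storage and a `deque` stands in for the Service Bus queue. The function names and the 256-byte threshold are hypothetical.

```python
import uuid
from collections import deque

# Hypothetical stand-ins: a dict plays the role of Blob Storage,
# a deque plays the role of the Service Bus queue.
blob_store = {}
queue = deque()

# Hypothetical size threshold; a real broker's limit depends on its tier.
MAX_INLINE_BYTES = 256


def send_with_claim_check(payload: bytes) -> None:
    """Sender: park large payloads in storage and enqueue only a reference."""
    if len(payload) <= MAX_INLINE_BYTES:
        queue.append({"inline": payload})
        return
    claim_id = str(uuid.uuid4())
    blob_store[claim_id] = payload           # the big payload travels out of band
    queue.append({"claim_check": claim_id})  # the queued message stays tiny


def receive_with_claim_check() -> bytes:
    """Receiver: dequeue a message and redeem the claim check if it has one."""
    message = queue.popleft()
    if "inline" in message:
        return message["inline"]
    # Fetch the parked payload and delete it from storage.
    return blob_store.pop(message["claim_check"])


if __name__ == "__main__":
    big_file = b"x" * 10_000
    send_with_claim_check(big_file)
    print(receive_with_claim_check() == big_file, len(blob_store))  # True 0
```

The design choice is that only a small reference passes through the broker. Payload size is then bounded by storage rather than by the messaging limit.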

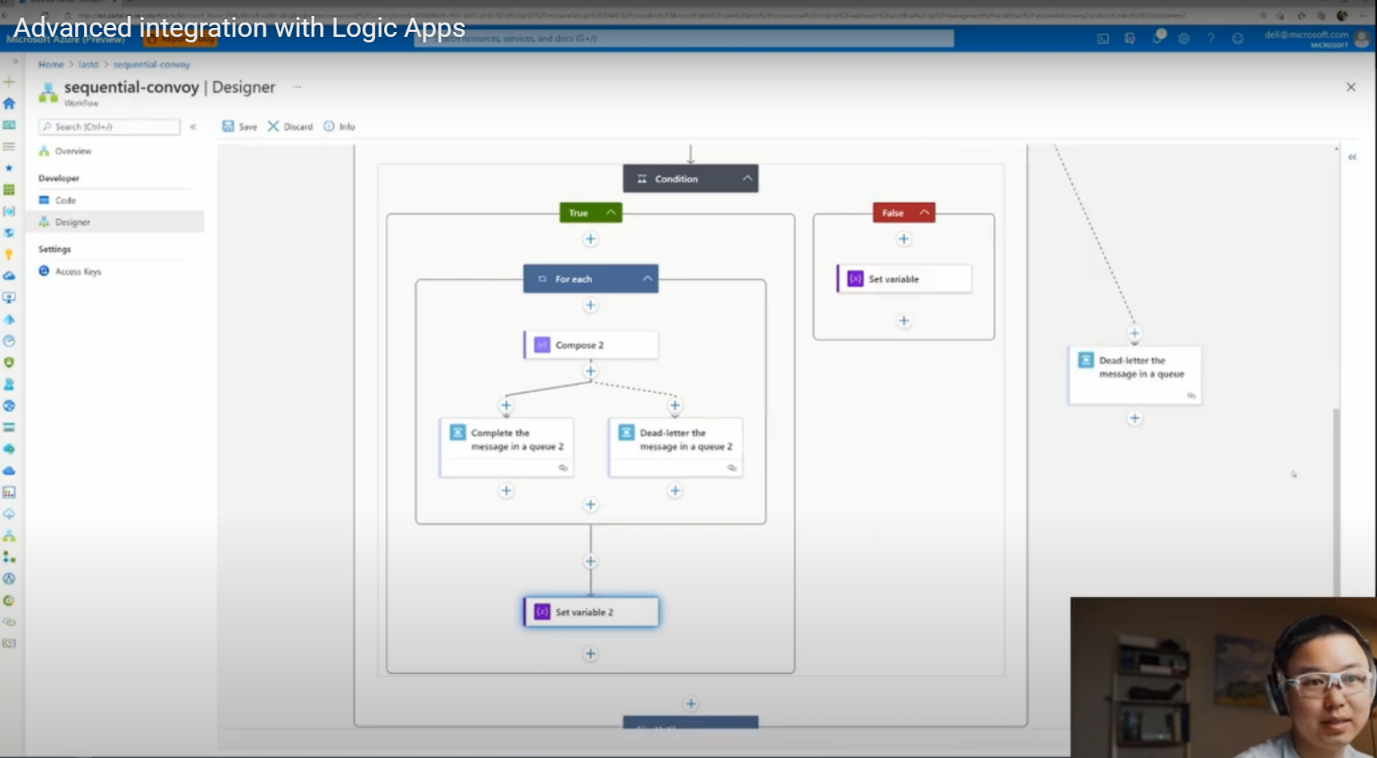

The next scenario was order processing for an e-commerce site, where handling and shipping each order involves multiple tasks. These tasks must be repeated for every order, and Derek explained how the Sequential Convoy messaging pattern can handle them. He implemented it with a dedicated Logic App run per order. The first message for an order determines which run picks up and processes that order’s messages.
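The core of a Sequential Convoy is correlation plus ordering. The Python sketch below shows only that idea. It does not model Service Bus sessions or Logic Apps. The `order_id` and `step` fields and the function name are hypothetical.

```python
from typing import Callable, Dict, List


def process_sequential_convoys(
    messages: List[dict], handle: Callable[[str, str], None]
) -> Dict[str, List[str]]:
    """Route each message to the run for its order, then process every run in order."""
    # Correlation: the first message for an order_id opens a dedicated run;
    # later messages with the same order_id join that run instead of a new one.
    runs: Dict[str, List[str]] = {}
    for msg in messages:
        runs.setdefault(msg["order_id"], []).append(msg["step"])

    # Ordering: each run handles its own steps strictly in arrival order, so the
    # steps of one order never interleave with or overtake each other.
    # (Runs execute one after another here; in the cloud they would run in parallel.)
    for order_id, steps in runs.items():
        for step in steps:
            handle(order_id, step)
    return runs


if __name__ == "__main__":
    log = []
    incoming = [
        {"order_id": "A", "step": "reserve"},
        {"order_id": "B", "step": "reserve"},
        {"order_id": "A", "step": "pay"},
        {"order_id": "A", "step": "ship"},
        {"order_id": "B", "step": "pay"},
    ]
    process_sequential_convoys(incoming, lambda o, s: log.append(f"{o}:{s}"))
    print(log)  # ['A:reserve', 'A:pay', 'A:ship', 'B:reserve', 'B:pay']
```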

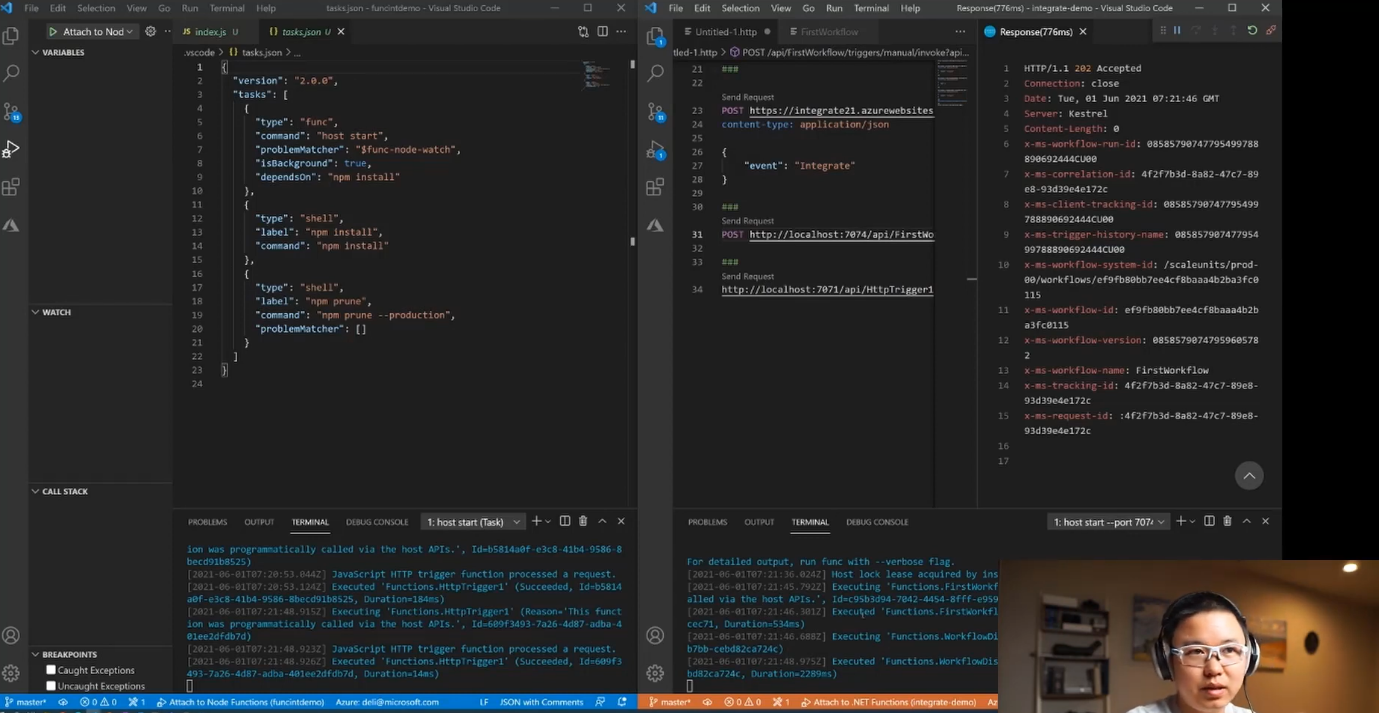

The next integration scenario was demonstrated in the local development experience and involved an HTTP Webhook for asynchronous processing. Derek revisited an issue he had hit while developing locally in his Day 1 session. The host name in the generated URL was localhost, which is a problem when the webhook is called from the internet. He demonstrated what the problem looks like and how it can be resolved.

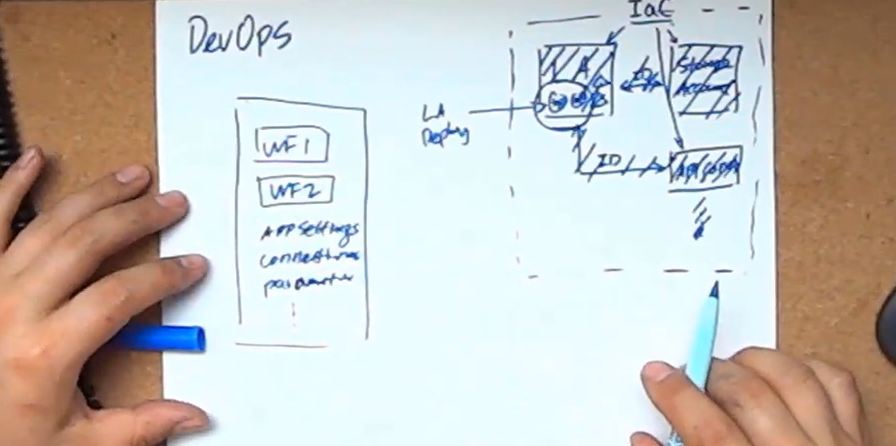

While working through this scenario locally, he ran into another challenge, which he chose to cover separately. In the previous demo, Derek had hardcoded the Azure Function endpoint in the workflow designer. This becomes a problem once the Logic Apps and Function App are deployed to the cloud, where the endpoint must be substituted. He explained how this leads into the DevOps experience with the Logic Apps Standard runtime.

He then walked through a diagram of the DevOps experience, followed by a demo implementing it. Finally, he wrapped up by introducing workflow parameters in Logic Apps Standard as the way to resolve the issue.
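The point of workflow parameters is that the workflow references a name and each environment supplies the value. The toy Python resolver below illustrates that idea under stated assumptions. The Logic Apps runtime performs this resolution itself, and this is not its code. The workflow fragment, the `functionEndpoint` parameter, and both URLs are hypothetical. The `@parameters('...')` expression form mirrors how Logic Apps workflows reference parameters.

```python
import re

# Hypothetical workflow fragment: the Function endpoint is no longer hardcoded;
# it is referenced through a parameter expression instead.
WORKFLOW = {
    "actions": {
        "Call_Function": {
            "type": "Http",
            "inputs": {"method": "POST", "uri": "@parameters('functionEndpoint')"},
        }
    }
}

# Hypothetical per-environment parameter sets (name -> {type, value}).
PARAMETERS = {
    "local": {"functionEndpoint": {"type": "String", "value": "http://localhost:7071/api/process"}},
    "cloud": {"functionEndpoint": {"type": "String", "value": "https://my-func-app.azurewebsites.net/api/process"}},
}

# Matches a string that is exactly one @parameters('name') expression.
_PARAM_EXPR = re.compile(r"^@parameters\('([^']+)'\)$")


def resolve(node, params):
    """Return a copy of node with whole-string parameter expressions replaced."""
    if isinstance(node, dict):
        return {key: resolve(value, params) for key, value in node.items()}
    if isinstance(node, list):
        return [resolve(value, params) for value in node]
    if isinstance(node, str):
        match = _PARAM_EXPR.match(node)
        if match:
            return params[match.group(1)]["value"]
    return node


if __name__ == "__main__":
    cloud = resolve(WORKFLOW, PARAMETERS["cloud"])
    print(cloud["actions"]["Call_Function"]["inputs"]["uri"])
```

The same workflow definition deploys unchanged to every environment. Only the parameter values differ, which is what makes it CI/CD-friendly.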

Altogether, it was an engaging session with many real-world scenarios that can be solved with advanced integration in Logic Apps.

Mattias Logdberg (Microsoft Azure MVP) of DevUP Solutions delivered a deep-dive session on some of the most used Azure messaging services.

He started by stating that “Messaging is when someone (A) sends a message via Queues to the receiver (B), where the Queue makes it possible for the consumer to be off grid for some time, which means that A doesn’t know that B exists”.

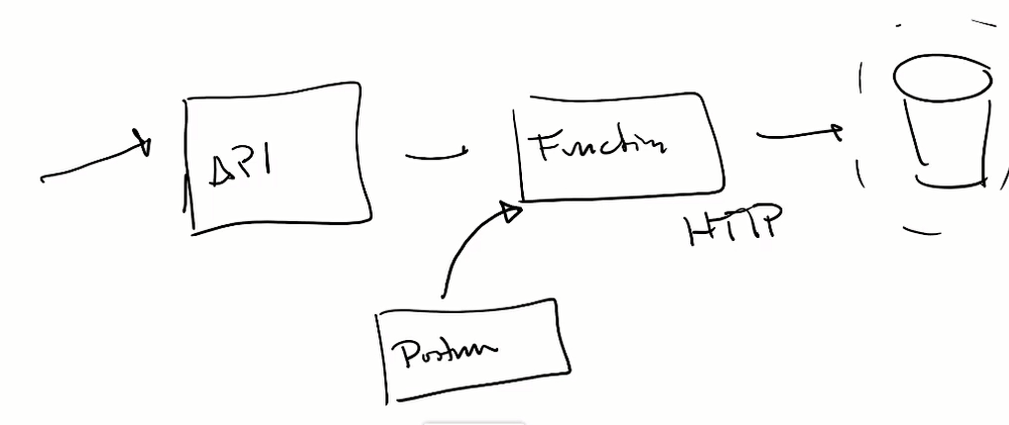

Next came a demo showing how Service Bus Queues increase message reliability and reduce the overall failure rate. He began by building a solution with APIs, Functions, and Cosmos DB (the receiver). Postman pumped messages into the API, and everything ran over HTTP.

Cosmos DB can only accept a limited number of requests, depending on your plan. A flood of incoming messages can therefore cause a high failure rate if the service is not scaled up in time. It can also lead to many retries before the messages are processed successfully.

This is where Queues help: they keep the failure rate down even when incoming requests spike.

To achieve this, he added a Service Bus Queue and an Azure Function to the solution. Messages now go to the Queue first and only then reach the endpoint (Cosmos DB).
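The effect of putting a queue in front of a rate-limited store is often called queue-based load leveling. The Python simulation below illustrates it in simplified form. It is not a model of Cosmos DB throttling. It assumes a fixed per-tick capacity and an unbounded queue, and the arrival numbers are made up.

```python
import itertools
from typing import List


def direct_calls(arrivals: List[int], capacity: int) -> int:
    """Every request hits the store at once; anything over capacity fails."""
    return sum(max(0, n - capacity) for n in arrivals)


def via_queue(arrivals: List[int], capacity: int) -> List[int]:
    """Enqueue every request; a consumer writes at most `capacity` per tick.

    Returns the number of items written in each tick until the queue is empty.
    """
    backlog = 0
    written_per_tick = []
    for tick in itertools.count():
        if tick < len(arrivals):
            backlog += arrivals[tick]  # a spike only grows the backlog
        elif backlog == 0:
            break                      # no more arrivals and nothing left to drain
        done = min(backlog, capacity)
        backlog -= done
        written_per_tick.append(done)
    return written_per_tick


if __name__ == "__main__":
    spike = [50, 50, 0, 0, 0]
    print(direct_calls(spike, capacity=20))  # 60 requests rejected
    print(via_queue(spike, capacity=20))     # [20, 20, 20, 20, 20]
```

The trade-off is latency: with the queue nothing is rejected, but the last items are written a few ticks later than they arrived.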

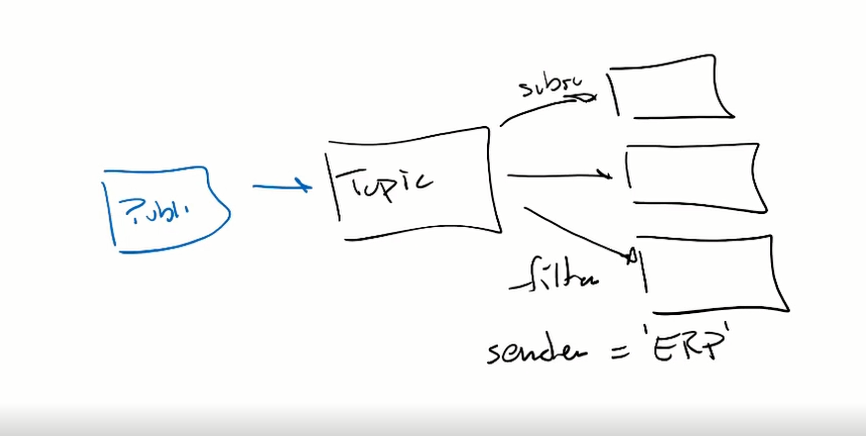

He then explained Service Bus Topics and Subscriptions and showed how to send messages through them using Service Bus Explorer. Topics implement a Pub/Sub model and are the best fit when you need to send messages to multiple receivers.

Event Grid is a one-to-many model based on push delivery. When you send an event to Event Grid, it makes sure the event is delivered to all of the receiving parties.
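The one-to-many fan-out behind Topics and Subscriptions can be sketched in a few lines of Python. This in-memory `Topic` class and its `subscribe`, `publish`, and `receive` methods are hypothetical and are not the Service Bus SDK. Each subscription keeps its own copy of matching messages, and receivers pull from their own subscription. Event Grid differs in that the broker pushes each event to the subscribed handlers instead of waiting for them to pull.

```python
from collections import deque
from typing import Callable, Dict, Optional, Tuple

Rule = Callable[[dict], bool]


class Topic:
    """Minimal in-memory pub/sub topic: every subscription gets its own copy."""

    def __init__(self) -> None:
        self.subscriptions: Dict[str, Tuple[Rule, deque]] = {}

    def subscribe(self, name: str, rule: Rule = lambda m: True) -> None:
        # Each subscription has a filter rule and its own inbox.
        self.subscriptions[name] = (rule, deque())

    def publish(self, message: dict) -> int:
        """Copy the message into every matching subscription; return how many."""
        delivered = 0
        for rule, inbox in self.subscriptions.values():
            if rule(message):
                inbox.append(dict(message))
                delivered += 1
        return delivered

    def receive(self, name: str) -> Optional[dict]:
        # Pull model: a receiver fetches from its own subscription when ready.
        _, inbox = self.subscriptions[name]
        return inbox.popleft() if inbox else None


if __name__ == "__main__":
    orders = Topic()
    orders.subscribe("billing")
    orders.subscribe("shipping-eu", rule=lambda m: m.get("region") == "EU")
    print(orders.publish({"id": 1, "region": "EU"}))  # 2
    print(orders.publish({"id": 2, "region": "US"}))  # 1
    print(orders.receive("shipping-eu"))              # {'id': 1, 'region': 'EU'}
```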

He also showed how to set up Event Grid Topics and Subscriptions in the Azure Portal in a step-by-step demonstration.

He then talked about dead-lettered events and messages. He noted that Service Bus is different because you stay in control. When a message is dead-lettered after a specified number of retries, it is kept in a dedicated place, the dead-letter queue, for further processing.
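The retry-then-dead-letter behaviour can be sketched in Python as follows. This is an in-memory illustration, not the Service Bus client. The limit of 3 is a hypothetical value for the demo. In Service Bus the maximum delivery count is configurable per queue or subscription and defaults to 10. The `drain` function and the message fields are hypothetical.

```python
from collections import deque
from typing import Callable

MAX_DELIVERY_COUNT = 3  # hypothetical; Service Bus lets you configure this


def drain(queue: deque, handler: Callable[[dict], None], dead_letter: deque) -> int:
    """Deliver messages until the queue is empty; return how many succeeded.

    A failing message is re-queued for another attempt until its delivery
    count reaches the limit, then it is moved to the dead-letter queue.
    """
    completed = 0
    while queue:
        msg = queue.popleft()
        msg["delivery_count"] = msg.get("delivery_count", 0) + 1
        try:
            handler(msg)
            completed += 1
        except Exception as err:
            if msg["delivery_count"] >= MAX_DELIVERY_COUNT:
                msg["dead_letter_reason"] = str(err)
                dead_letter.append(msg)  # parked for inspection or reprocessing
            else:
                queue.append(msg)        # make it available for redelivery
    return completed


if __name__ == "__main__":
    def handler(msg: dict) -> None:
        if msg["body"] == "bad":
            raise ValueError("cannot parse body")

    main, dlq = deque([{"body": "ok"}, {"body": "bad"}]), deque()
    print(drain(main, handler, dlq))                                # 1
    print(dlq[0]["delivery_count"], dlq[0]["dead_letter_reason"])   # 3 cannot parse body
```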

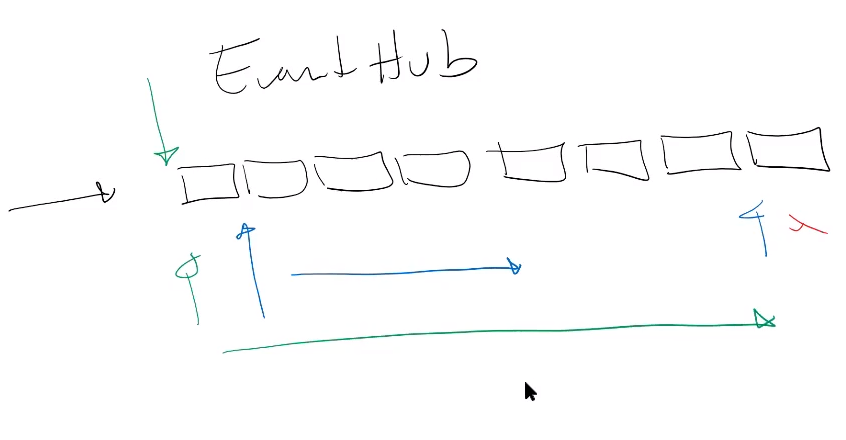

Event Hub works more like an event stream in which you build up a log. That makes it a very good option when ingesting large volumes of data.

The main difference between Event Hub and the other services is that you can go back and read the data from any point when an error occurs. You can even read it from the beginning to understand why the error actually occurred.
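The replay ability comes from reading an append-only log by offset instead of removing messages when they are consumed. The Python class below is a simplified sketch of that idea only. It is hypothetical, models a single partition, and ignores retention, so events never expire here.

```python
from typing import List, Tuple


class EventLog:
    """Append-only, partition-like log: reading never removes events."""

    def __init__(self) -> None:
        self._events: List[str] = []

    def append(self, event: str) -> int:
        """Store an event and return its offset."""
        self._events.append(event)
        return len(self._events) - 1

    def read_from(self, offset: int, max_count: int = 100) -> List[Tuple[int, str]]:
        """Return (offset, event) pairs starting at any offset, including 0."""
        end = min(offset + max_count, len(self._events))
        return [(i, self._events[i]) for i in range(offset, end)]


if __name__ == "__main__":
    log = EventLog()
    for reading in ["t=20", "t=21", "t=bad", "t=22"]:
        log.append(reading)
    print(log.read_from(2, max_count=1))  # [(2, 't=bad')]
    print(len(log.read_from(0)))          # 4: the full history is still there
```

A queue consumer that completes a message loses it. Here a consumer only moves its own checkpoint, so several readers can rewind independently.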

Nino Crudele, Solution Director at Hitachi Solutions and a frequent speaker at INTEGRATE, gave a delightful session on the much-needed topic of a security baseline for integrations.

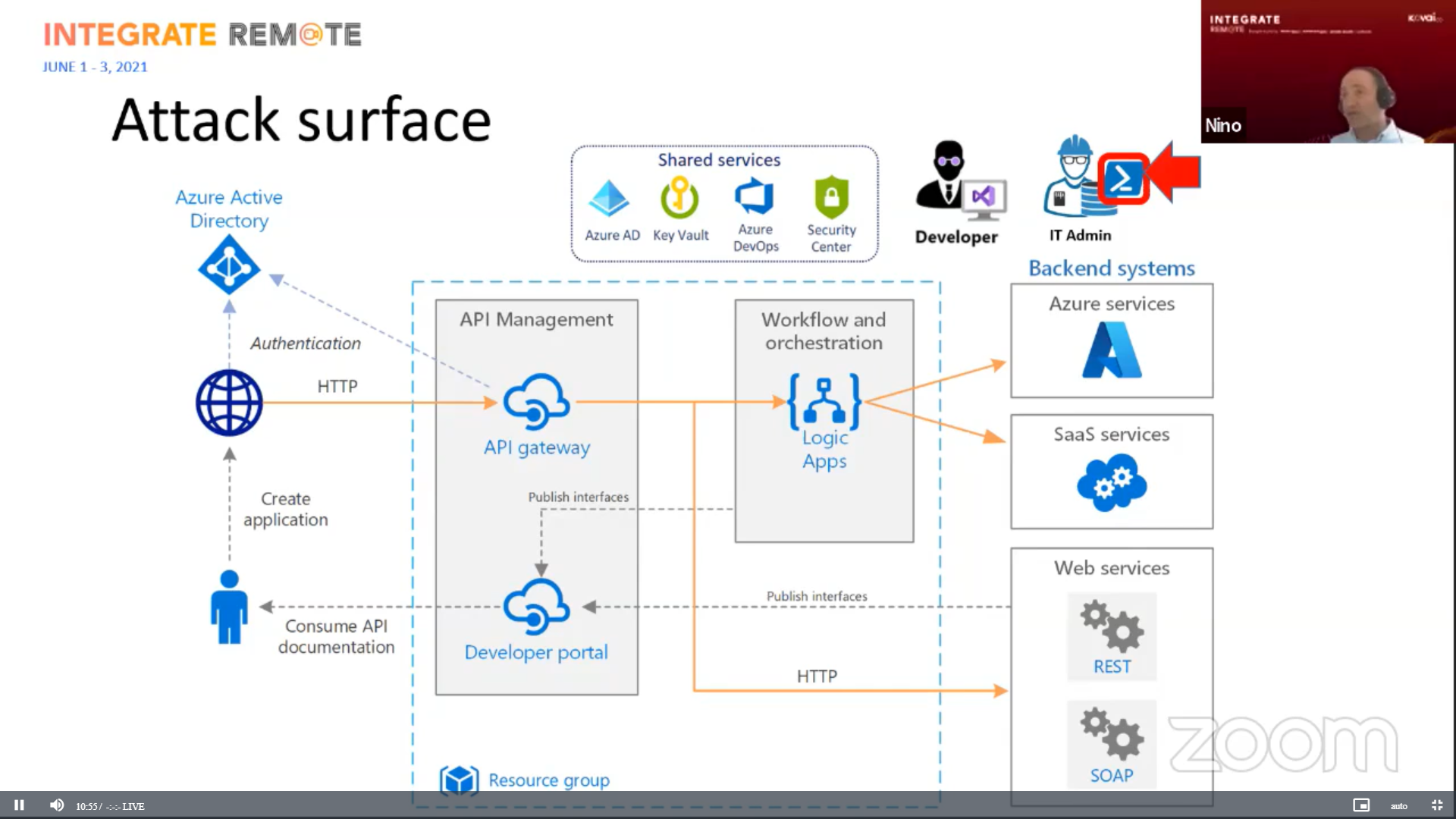

Nino started the session by explaining how important security is in the cloud. Cloud Shell is one of the most powerful and most used tools for deploying resources or performing any activity in Azure. However, Nino pointed out that Cloud Shell is one of the weakest points of Azure, and that attacks through PowerShell are more likely to succeed.

To reduce these risks, Nino suggested the following approaches available in Azure. Most of them use the OAuth 2.0 protocol.

For Logic Apps, the security measures below can be implemented to reduce the risk from external attacks:

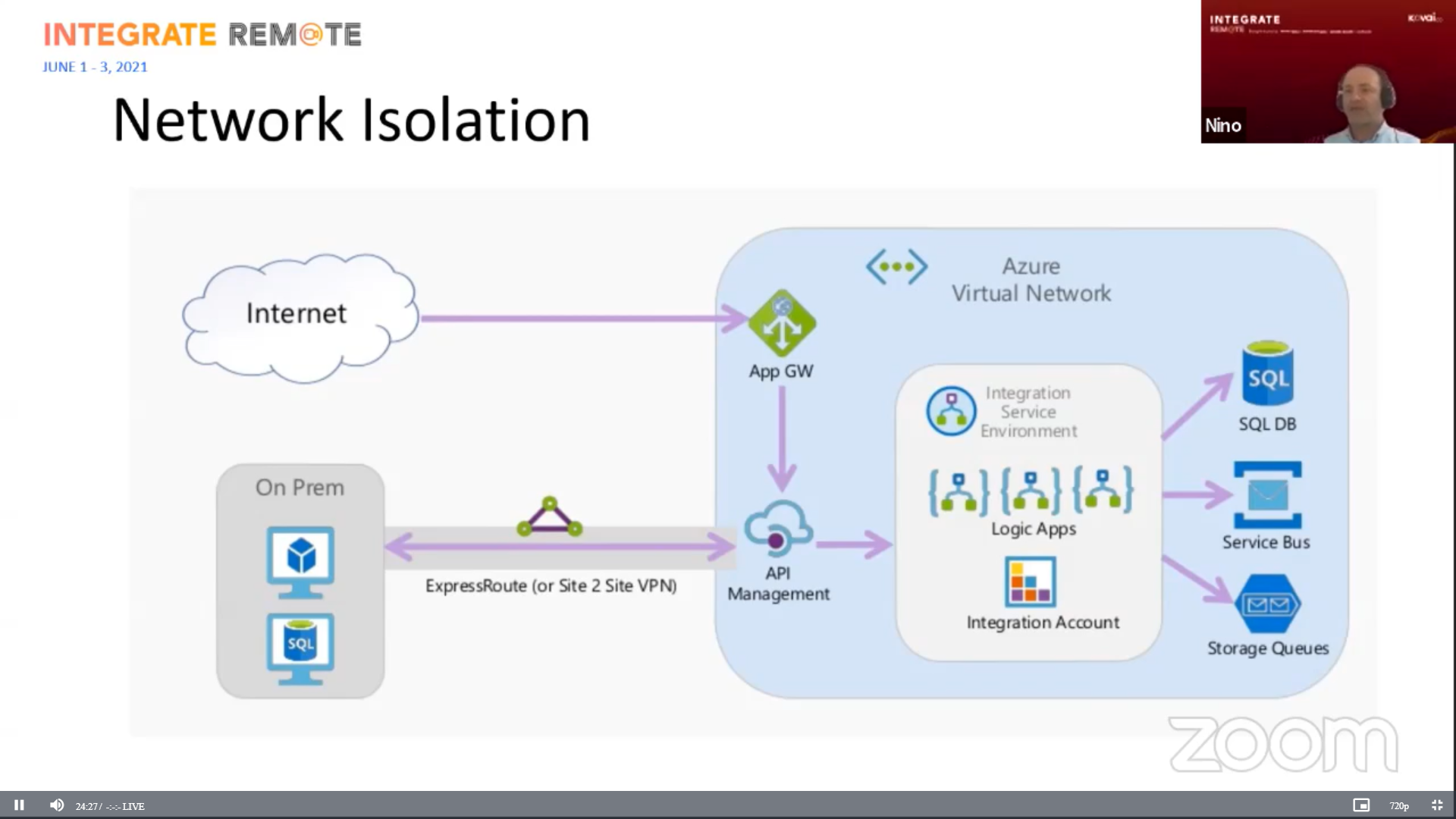

If you need to secure all the resources your organisation uses, Nino suggests Network Isolation. With Network Isolation, a VNet isolates your applications, in one or more subscriptions, from external connections. VPN access should be mandatory for any connection to resources inside the VNet, forming the strongest possible security wall.

To protect against internal attacks, Nino suggests using Azure Managed Identity. Instead of depending entirely on Key Vault, Managed Identity adds one more level of security by combining Key Vault with an Azure identity.


The best practices covered in the session can also be applied to other resources, depending on the nature of each resource. You can also use policies to meet your security standards. Remember: trust no one, and make the security wall as strong as possible wherever possible.

Nino shared very hands-on guidance for an Azure security baseline for integration. The session examined the most crucial integration technology stacks, such as Logic Apps, Power Apps, Azure Functions, and many others. For each technology, Nino gave his best recommendations for delivering solid, secure integration solutions in cloud and on-premises hybrid integration scenarios.

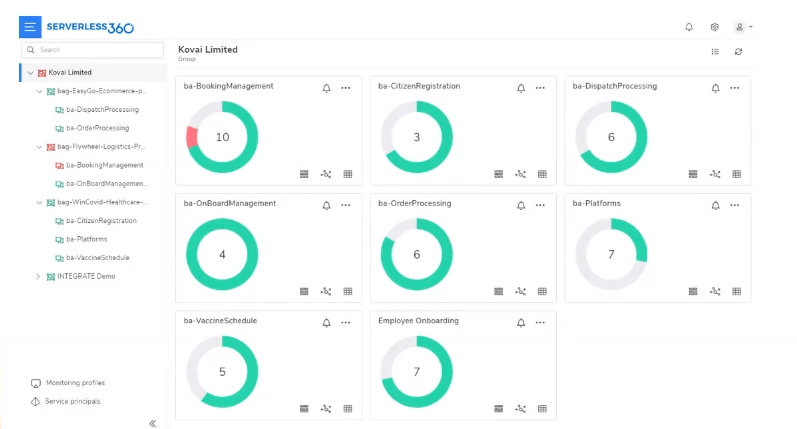

In this session, Saravana Kumar, CEO of Kovai.co, gave an exclusive showcase of the brand-new version of Serverless360 (v2), an almost complete revamp of the current product.

He started with a short introduction to Serverless360. It was initially called ServiceBus360 because it focused primarily on Service Bus. Over the years the product has evolved, and it now supports 28 different Azure resources.

Serverless360 now has three core pillars:

An enterprise might have only a couple of Subscriptions, with many Azure resources created in a single subscription. Other companies might have 200+ Subscriptions. Either way, managing the resources is challenging without a business application context.

This is where this feature helps. It logically groups Azure entities to represent your Line of Business applications.

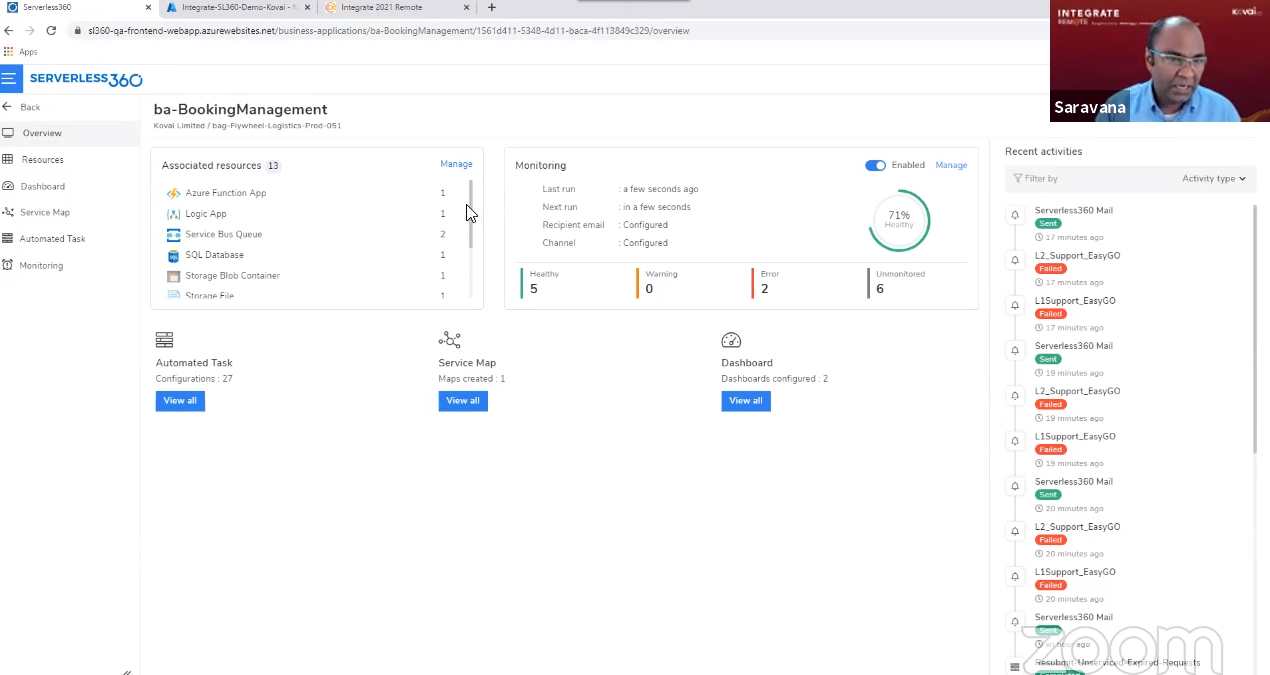

He then clearly contrasted the Azure Portal, which does not provide business application context, with Serverless360. He followed this with a demo of configuring the Business Application feature.

The image below shows various resources involved in an application, such as Logic Apps, Functions, and Service Bus, grouped for application-level management.

The session also covered other significant features, including Dashboards, Service Map (to visualize the relationships between entities), Automated Tasks, and Monitoring.

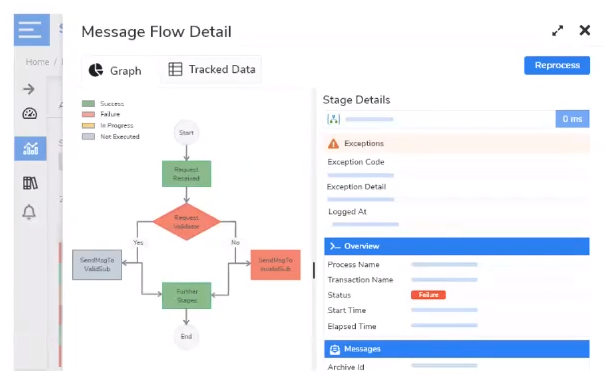

You no longer need to build a custom logging and tracking solution. This feature provides end-to-end tracking and monitoring for your business applications. It lets you define your logical business processes and capture data points at various stages.

He then gave a complete demonstration of configuring and using this feature in a real-world application.

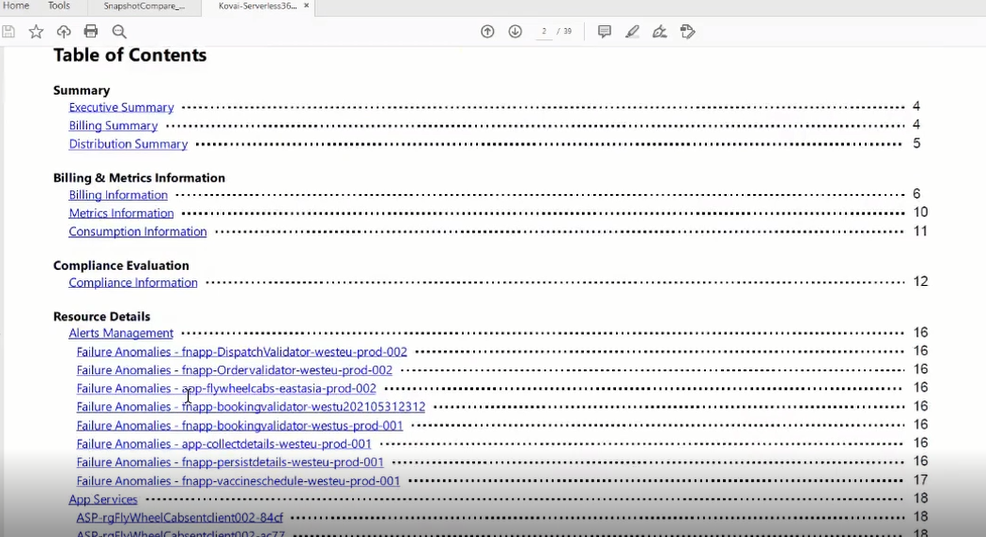

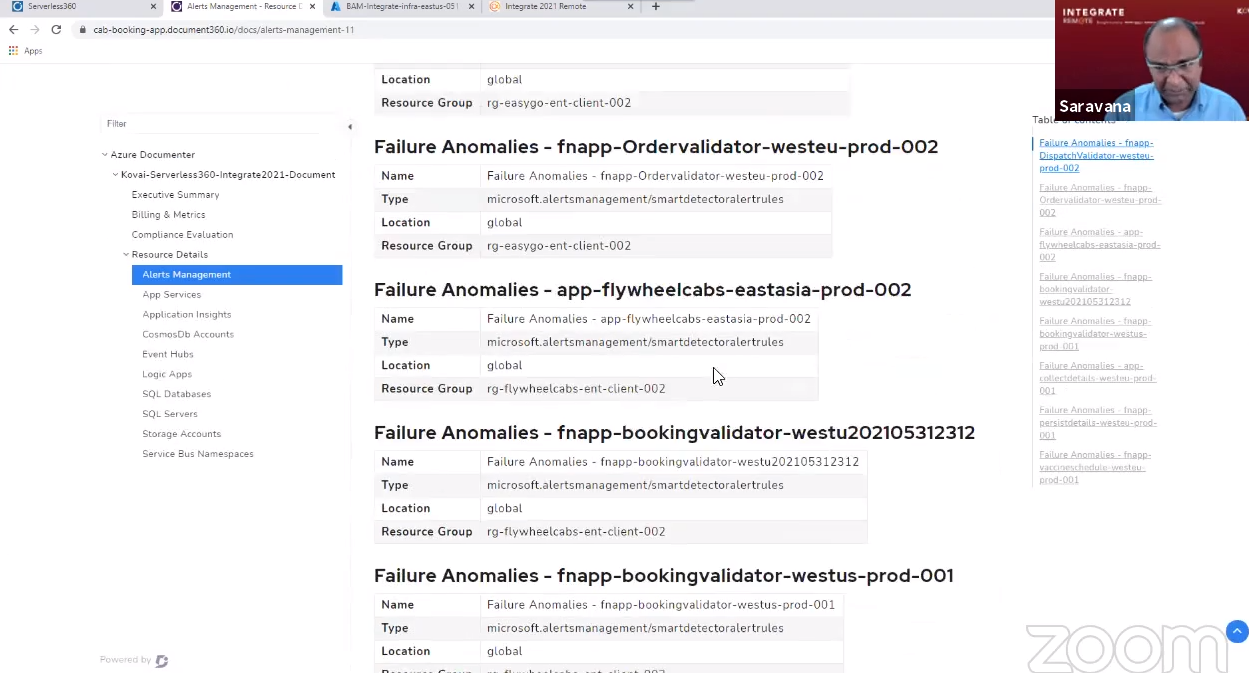

This feature helps users understand their Azure infrastructure in layman’s terms. Understanding what is going on in your Subscriptions can be difficult, because they are often huge and run many services.

It lets you generate documentation as a PDF and even publish it online through one of our other products, Document360.

Here is the table of contents for a sample 39-page document that Saravana generated during the session:

He also showed how to generate documentation and publish it online using Document360.

Key benefits of using Azure Documenter:

With all these capabilities, Serverless360 is now being positioned as a portal built to help Azure support teams.

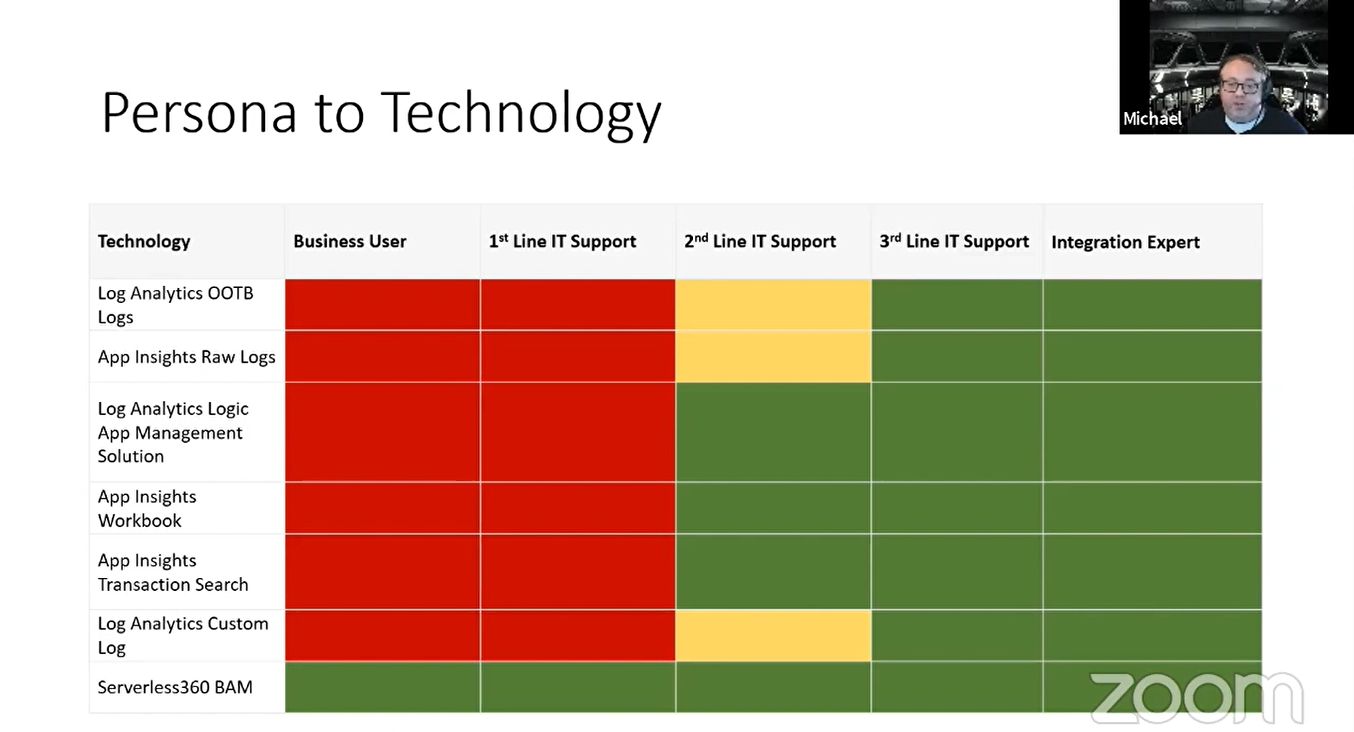

In this session, Michael Stephenson, Microsoft MVP and technical consultant for Serverless360, discussed how monitoring solutions such as Log Analytics, Application Insights, and BAM can enhance the monitoring of Azure integrations.

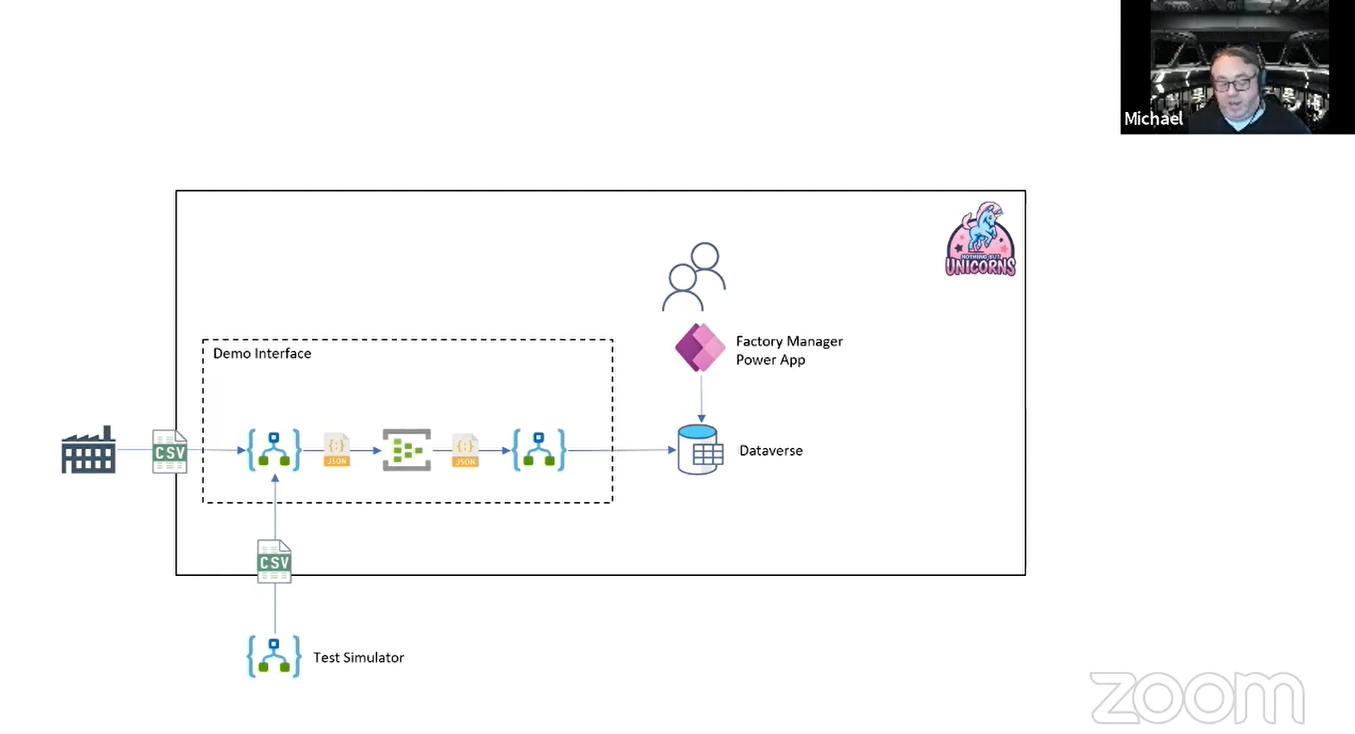

Mike used a scenario based on his own company, Nothing but Unicorns. The company’s suppliers share supplier data as CSV files. Under the hood, different Azure services process this CSV data, which is then stored in Power Apps, as shown below:

To monitor this critical system, Mike chose three different monitoring tools for Azure integrations:

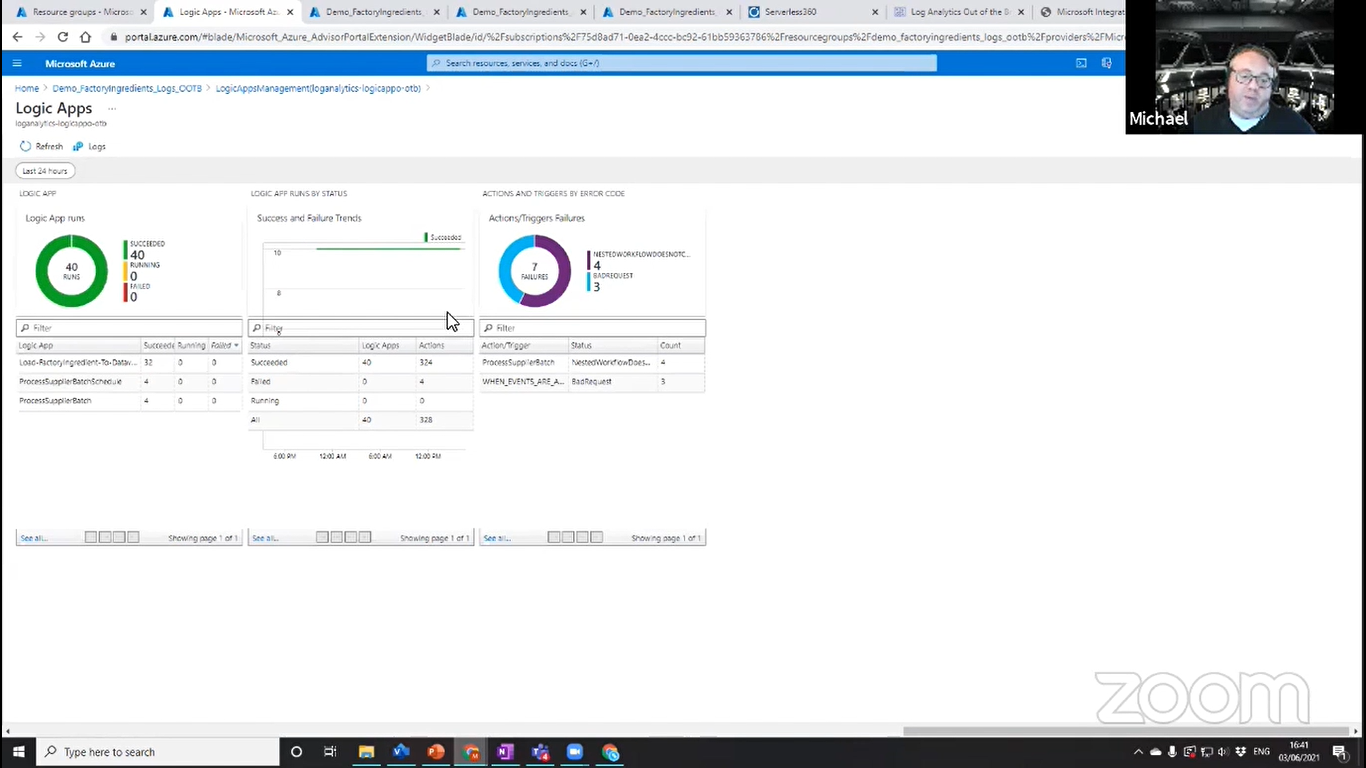

Since this scenario contains multiple Logic Apps, he started with Logic App Management, powered by Log Analytics. Logic App Management is a solution on top of Log Analytics that aggregates Logic App data and visualises it better. Mike showed how to set up Logic App Management and shared some best practices.

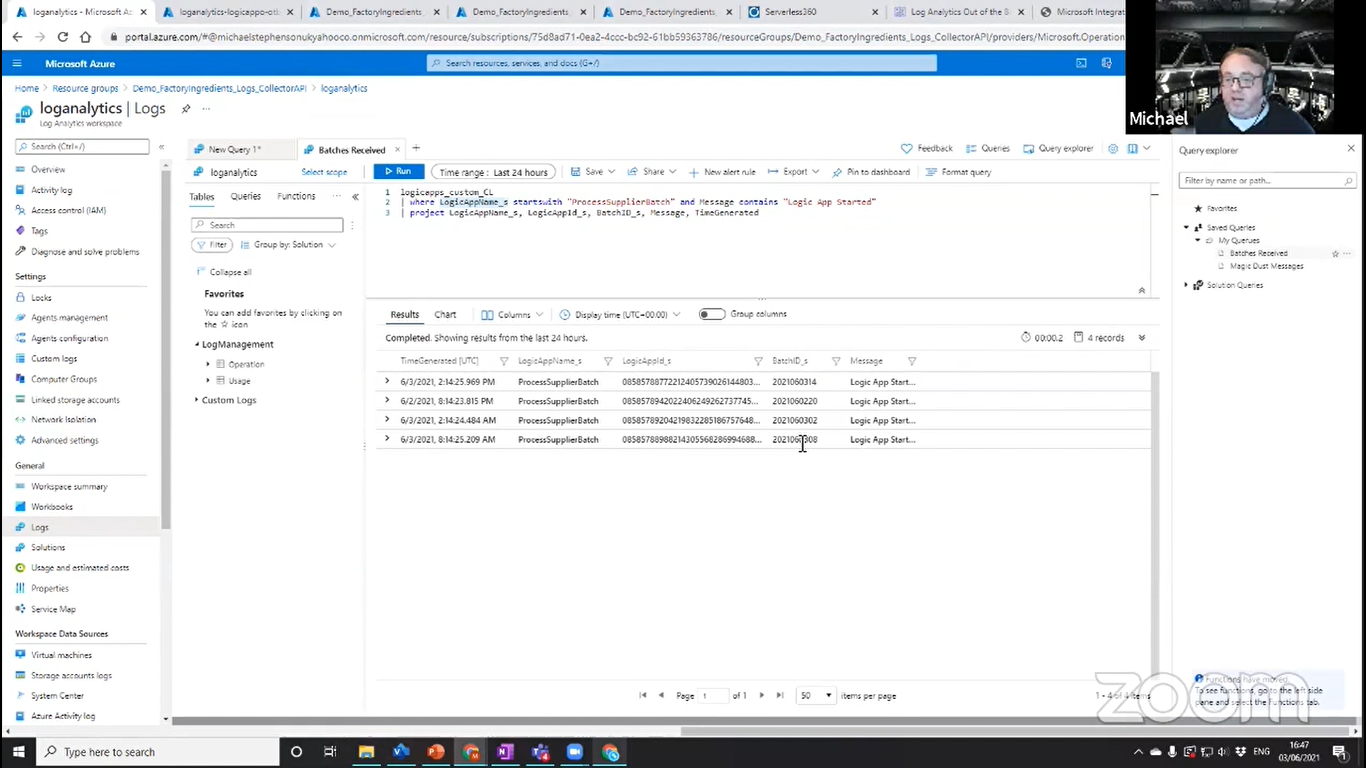

After Logic App Management, Mike moved on to monitoring the same scenario with the Log Analytics Data Collector. This Log Analytics capability can be used to send custom logs from different systems into a workspace, where they can be aggregated using KQL. Mike also shared the advantages of KQL and best practices for using it.
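For a sense of what sending a custom log involves, here is a Python sketch that prepares a request for the Log Analytics HTTP Data Collector API using only the standard library. The signing scheme follows Microsoft’s published samples: HMAC-SHA256 over the method, content length, content type, `x-ms-date` header, and `/api/logs` resource, keyed with the base64-decoded workspace key. The function name, workspace ID, key, and `SupplierImport` log type are hypothetical, and the sketch builds the request without sending it.

```python
import base64
import hashlib
import hmac
import json
from datetime import datetime, timezone
from typing import Dict, List, Tuple


def build_data_collector_request(
    workspace_id: str, shared_key: str, log_type: str, records: List[dict], now: datetime
) -> Tuple[str, Dict[str, str], bytes]:
    """Prepare (url, headers, body) for a POST to the HTTP Data Collector API."""
    body = json.dumps(records).encode("utf-8")
    rfc1123_date = now.strftime("%a, %d %b %Y %H:%M:%S GMT")  # `now` must be UTC
    # String to sign: method, content length, content type, x-ms-date, resource.
    string_to_sign = f"POST\n{len(body)}\napplication/json\nx-ms-date:{rfc1123_date}\n/api/logs"
    digest = hmac.new(
        base64.b64decode(shared_key), string_to_sign.encode("utf-8"), hashlib.sha256
    ).digest()
    signature = base64.b64encode(digest).decode()
    url = f"https://{workspace_id}.ods.opinsights.azure.com/api/logs?api-version=2016-04-01"
    headers = {
        "Content-Type": "application/json",
        "Log-Type": log_type,  # records land in a custom table named <Log-Type>_CL
        "x-ms-date": rfc1123_date,
        "Authorization": f"SharedKey {workspace_id}:{signature}",
    }
    return url, headers, body


if __name__ == "__main__":
    fake_key = base64.b64encode(b"not-a-real-key").decode()
    url, headers, body = build_data_collector_request(
        "my-workspace-id", fake_key, "SupplierImport",
        [{"file": "suppliers.csv", "rows": 120}], datetime.now(timezone.utc),
    )
    print(url)
    # To send it: urllib.request.Request(url, data=body, headers=headers, method="POST")
```

Once ingested, the records can be queried with KQL like any other table in the workspace.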

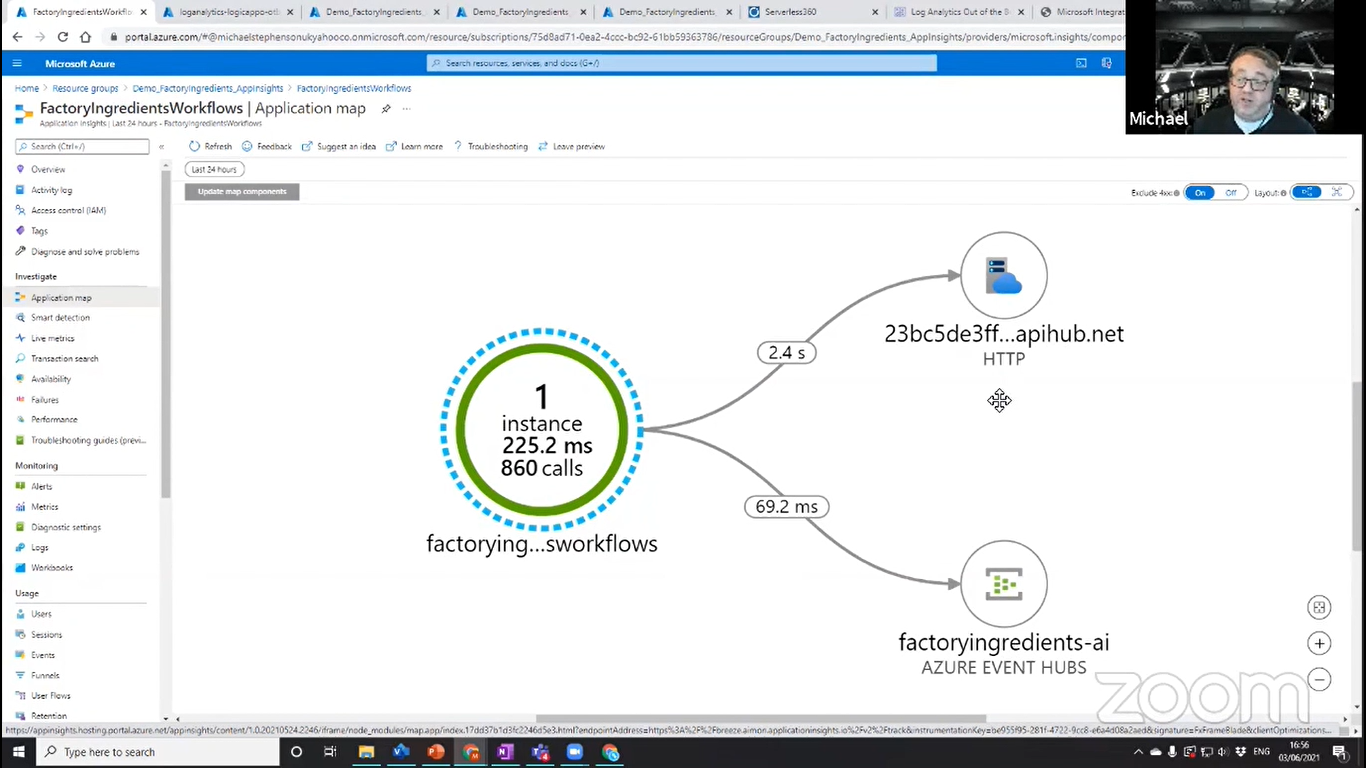

The next demo was on Application Insights, which is now supported for Logic Apps Standard because it runs on the Azure Functions runtime. It offers capabilities such as Live Monitoring, Application Map, Failure Monitoring, and more. Application Insights is also connected to Log Analytics, so users can dig deeper for more insights at any time.

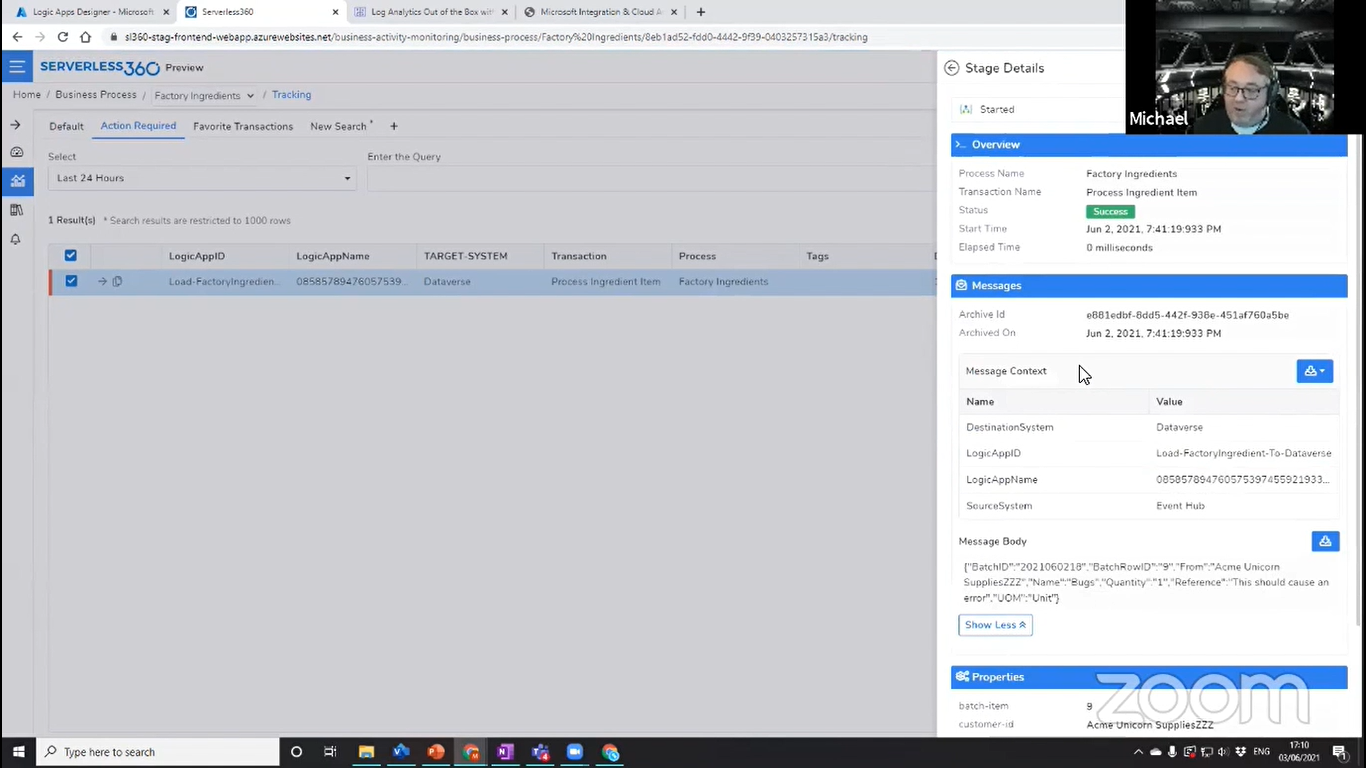

The tools above are aimed mostly at developers and solution architects. Business users and support staff need better insights with a much more intuitive user experience. That is where Serverless360 BAM comes in: an end-to-end tracking solution for your business processes. Mike presented BAM capabilities such as Dashboards, Monitoring, and Querying, and showed how they improve business efficiency.

Mike also explained how BAM stands out from the other tools for different users in an organisation. Judging by the audience’s appreciation, Serverless360 BAM clearly won the comparison.

Kevin Lam, Principal Program Manager on the Azure messaging team, joined to provide an informative session on “Event Hubs Updates”.

He structured the session around the following agenda:

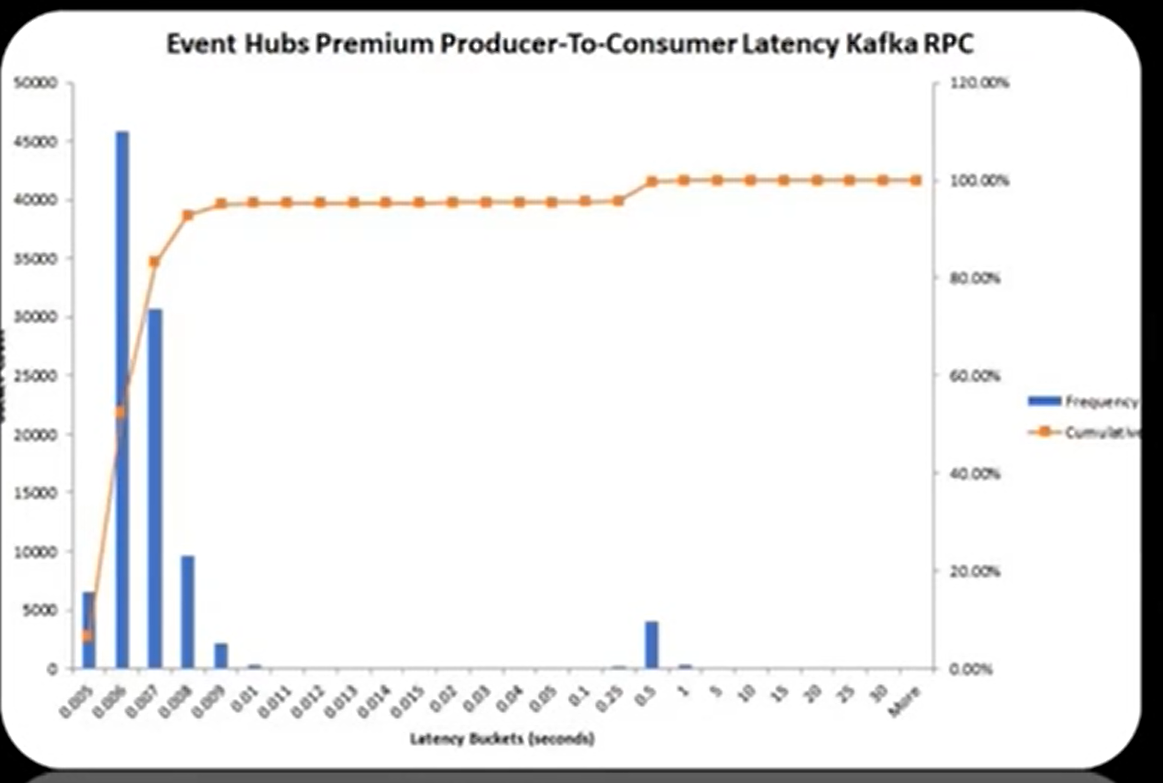

Event Hubs is a data streaming platform that streamlines the data pipeline, giving users a better view of the insights received from various locations. It can process millions of events per second with high throughput and low latency.

He emphasized choosing the right messaging service from the suite, because one size doesn’t fit all.

Compared to Service Bus, Event Hubs has its own distinctive features:

At its core, Event Hubs doesn’t run or host Kafka. Instead, it implements a Kafka protocol head.
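Because Event Hubs speaks the Kafka protocol, an existing Kafka client usually needs only new connection settings. The Python helper below builds librdkafka-style settings, the dictionary form used by clients such as `confluent-kafka`, following the documented pattern for the Event Hubs Kafka endpoint: port 9093, SASL_SSL with the PLAIN mechanism, the literal username `$ConnectionString`, and the namespace connection string as the password. The helper name and the namespace value are hypothetical.

```python
from typing import Dict


def kafka_config_for_event_hubs(namespace: str, connection_string: str) -> Dict[str, str]:
    """Client settings for reaching Event Hubs through its Kafka protocol head."""
    return {
        # The Kafka endpoint lives on port 9093 of the namespace host.
        "bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        # With PLAIN auth the username is the literal string "$ConnectionString"
        # and the password is the namespace connection string itself.
        "sasl.username": "$ConnectionString",
        "sasl.password": connection_string,
    }


if __name__ == "__main__":
    cfg = kafka_config_for_event_hubs("contoso-ns", "<your-connection-string>")
    print(cfg["bootstrap.servers"])  # contoso-ns.servicebus.windows.net:9093
    # e.g. with confluent-kafka installed: Producer(cfg).produce("my-event-hub", b"hello")
```

No Kafka brokers are involved. The client believes it is talking to Kafka, while Event Hubs translates the protocol.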

Kevin also highlighted some of the key features of Event Hubs:

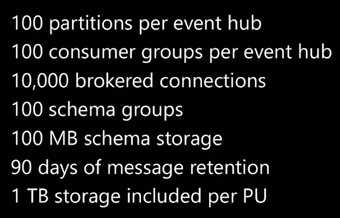

Kevin then walked through the features available in the Premium tier:

It provides a new multi-tiered persistent store with triple replication of data across Availability Zones, and a response latency of 6 to 8 ms.

Finally, Kevin shed light on the Event Hubs roadmap and concluded his talk.

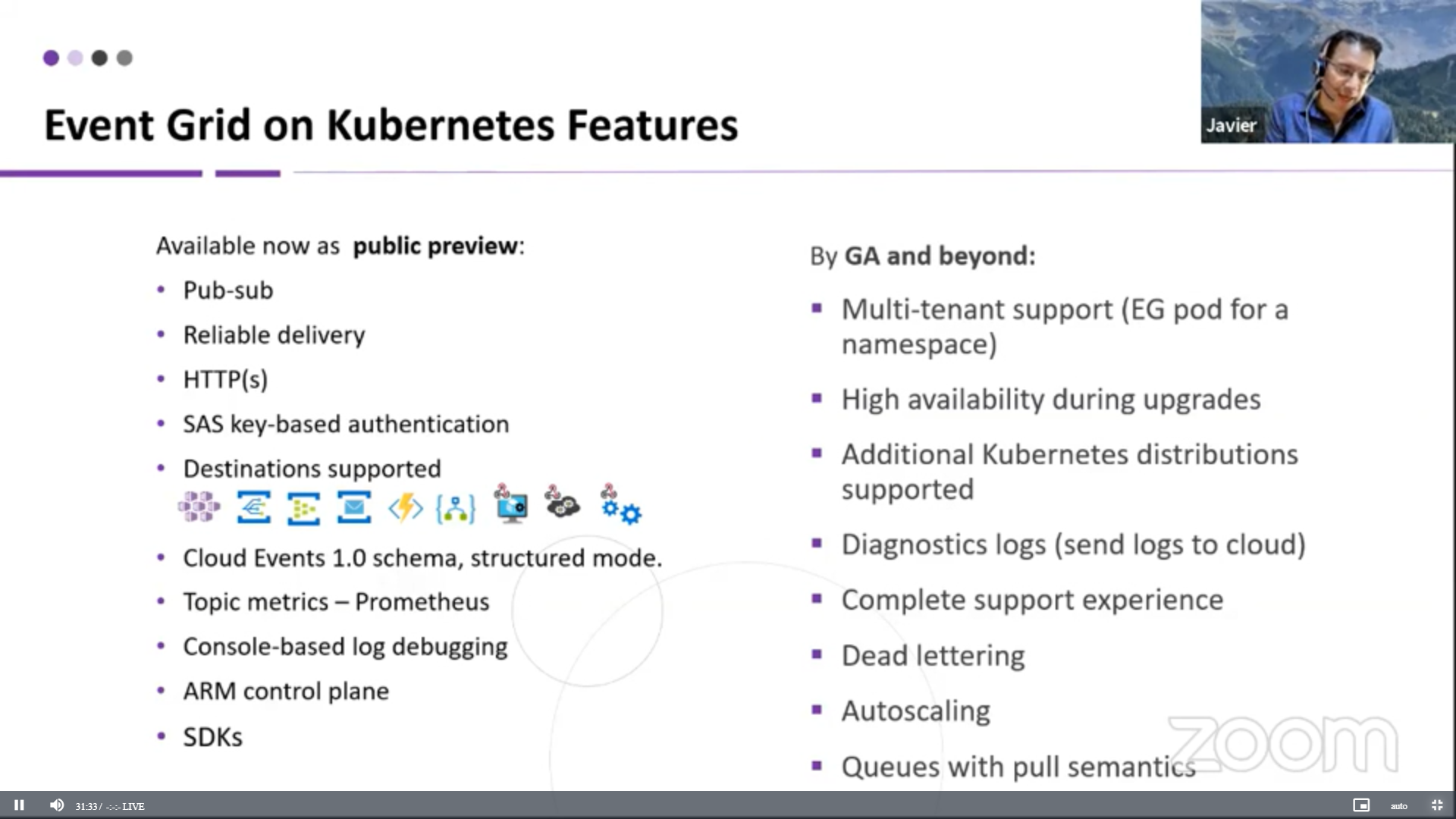

Javier Fernandez, Product Manager for Azure Event Grid, presented a session on Azure Event Grid updates. Although it was the closing session of INTEGRATE, it was well designed and executed, with hands-on demos.

The session started with Javier presenting what Event Grid is and its use cases. He then dove into what an event is and what an event broker is. He also covered the difference between event publishers and event handlers, along with all the entities of Event Grid.
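To ground those terms, the Python sketch below builds an event using the field names of the Event Grid event schema. It also shows how a subscription’s basic filters decide whether a handler receives the event. The `topic` and `metadataVersion` fields are left out because Event Grid fills them in itself. The `matches` function is a simplified imitation of the `includedEventTypes`, `subjectBeginsWith`, and `subjectEndsWith` filters, not the service’s code. Subject matching is case-insensitive here, as it is by default. The event type and subject values are hypothetical.

```python
import uuid
from datetime import datetime, timezone
from typing import List, Optional


def make_event(subject: str, event_type: str, data: dict) -> dict:
    """Publisher side: an event in the Event Grid schema (topic/metadataVersion set by the service)."""
    return {
        "id": str(uuid.uuid4()),
        "subject": subject,
        "eventType": event_type,
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "data": data,
        "dataVersion": "1.0",
    }


def matches(
    event: dict,
    included_event_types: Optional[List[str]] = None,
    subject_begins_with: str = "",
    subject_ends_with: str = "",
) -> bool:
    """Broker side: would this event subscription deliver the event to its handler?"""
    if included_event_types and event["eventType"] not in included_event_types:
        return False
    subject = event["subject"].lower()  # case-insensitive subject matching
    return subject.startswith(subject_begins_with.lower()) and subject.endswith(
        subject_ends_with.lower()
    )


if __name__ == "__main__":
    evt = make_event("/orders/eu/12345", "Contoso.Orders.OrderPlaced", {"orderId": "12345"})
    print(matches(evt, ["Contoso.Orders.OrderPlaced"], subject_begins_with="/orders/eu/"))  # True
    print(matches(evt, subject_ends_with=".csv"))                                           # False
```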

A few advantages of using Event Grid:

Javier presented a few newly released Event Grid features that add value for users.

Javier also revealed the roadmap for Azure Event Grid, which looks promising for adding business value.

The Azure Event Grid Updates session was a useful one, with a lot of knowledge sharing on the future of the service. Like many other products, Event Grid is request-driven, and features are prioritized by the number of votes each request gets. To add a feature request or upvote an existing one, please visit the Microsoft documentation.

That’s a wrap for INTEGRATE 2021 Remote.

At this juncture, we would like to extend our sincere thanks to all the attendees, speakers, partners, and sponsors for supporting us in successfully running the 2021 edition of INTEGRATE.

We hope to count on your support in the future as well.

This blogpost was prepared by:

Amritha Nishanth Christhini Nadeem Modhana Sri Hari